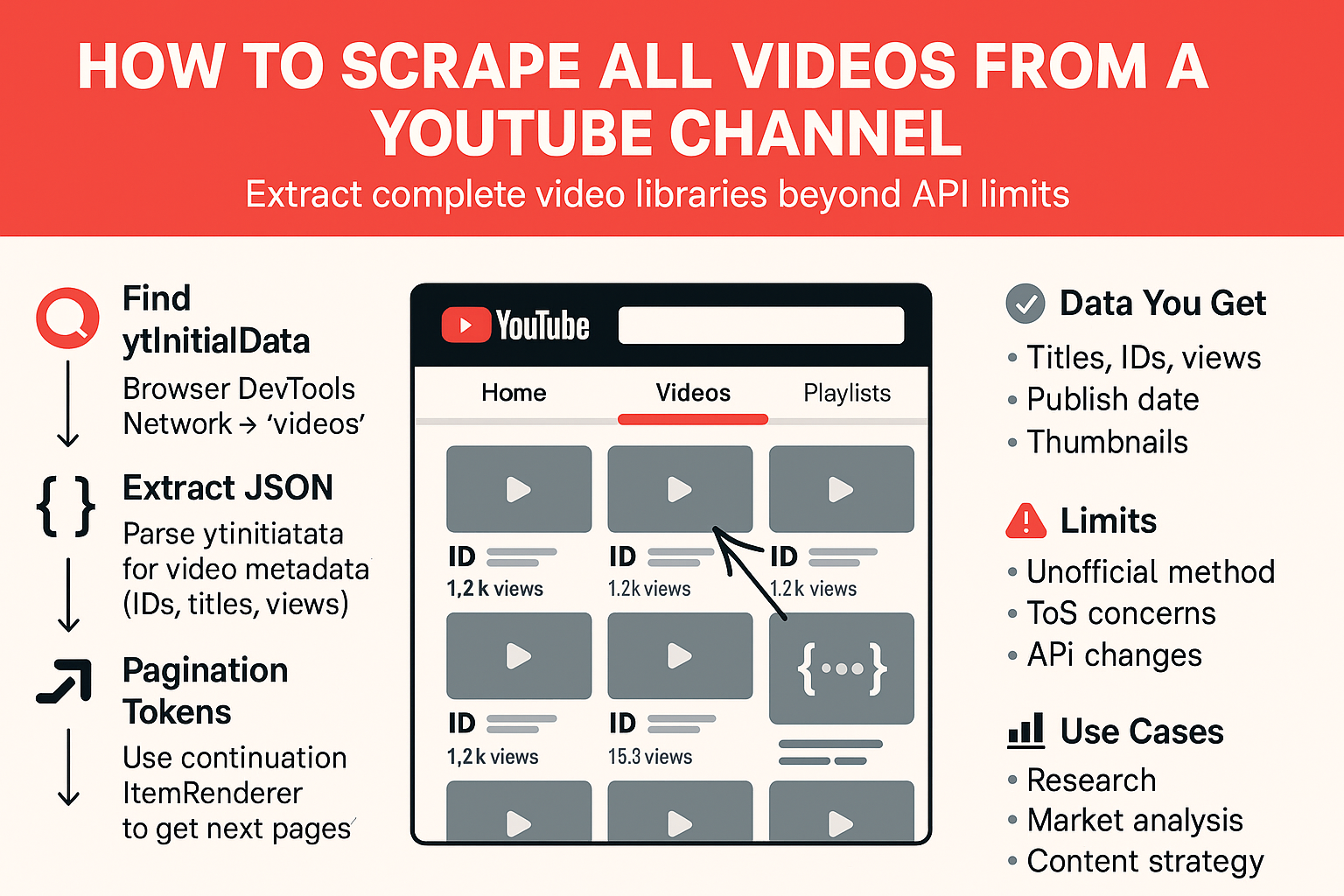

Want to extract all videos from a YouTube channel for analysis, research, or competitive intelligence?

While YouTube's official API has strict quotas and limitations, there's a more direct approach using the same endpoints that power YouTube's web interface.

Here's how to scrape any channel's complete video library.

Understanding YouTube's Data Structure

YouTube's web interface loads video data through internal API calls that we can reverse-engineer.

The key is understanding that YouTube embeds initial page data in a JavaScript object called ytInitialData, then uses continuation tokens for pagination.

Let's walk through the process using Starter Story's channel as an example, though this method works for any YouTube channel.

Step 1: Finding the Initial Data

Start by navigating to any YouTube channel's videos page. Open your browser's developer tools (F12), go to the Network tab, and refresh the page. Look for the first request named "videos". This contains all the initial video data.

In the response, search for "ytInitialData". This JavaScript object contains the structured data that powers the entire videos page, including video titles, IDs, thumbnails, and view counts.

To verify you've found the right data, search for one of the visible video titles within this object.
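To do the same lookup in code, here's a minimal sketch for Node.js 18+ (which ships a global `fetch()`). It downloads a channel's `/videos` page and returns the text of the script tag that assigns `ytInitialData`. That string is what the Step 2 snippet calls `ytInitialDataString`. The helper names and the example User-Agent are illustrative assumptions. The sketch also assumes the assignment looks like `var ytInitialData = {...};`.

```javascript
// Hypothetical helpers: fetch a channel's videos page and locate the ytInitialData script.
// Requires Node.js 18+ for the global fetch().
const USER_AGENT = // example desktop browser UA (assumption); copy yours from DevTools
  "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36";

async function fetchVideosPage(channelUrl) {
  // channelUrl is assumed to look like "https://www.youtube.com/@SomeHandle"
  const res = await fetch(`${channelUrl.replace(/\/+$/, "")}/videos`, {
    headers: { "User-Agent": USER_AGENT, "Accept-Language": "en-US,en;q=0.9" },
  });
  if (!res.ok) throw new Error(`HTTP ${res.status} for ${res.url}`);
  return res.text();
}

// Return the body of the first <script> tag that assigns ytInitialData, or null
// (for example, when a consent page was served instead of the channel page).
function findInitialDataScript(html) {
  const scriptRe = /<script[^>]*>([\s\S]*?)<\/script>/g;
  for (const match of html.matchAll(scriptRe)) {
    if (/ytInitialData\s*=\s*\{/.test(match[1])) return match[1];
  }
  return null;
}
```

A regex over script tags keeps the sketch dependency-free. Cheerio, as the article suggests, is the sturdier choice once the scraper grows.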

Step 2: Extracting and Parsing the Data

ytInitialData is embedded as an object literal inside a script tag, so we need to extract that text and parse it into usable JSON. Using a library like Cheerio in Node.js, locate the script tag containing ytInitialData and extract the JSON portion:

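```javascript
// ytInitialDataString is the text of the <script> tag that assigns ytInitialData,
// typically of the form `var ytInitialData = {...};`. Keep only the object literal.
const start = ytInitialDataString.indexOf("{");
const end = ytInitialDataString.lastIndexOf("}") + 1;
const jsonData = JSON.parse(ytInitialDataString.substring(start, end));
```

Step 3: Navigating YouTube's Nested Structure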

YouTube's JSON structure is notoriously complex, but there's a pattern: everything important is wrapped in "renderer" objects. For video listings, you need to find the "tabRenderer" where the title equals "Videos".
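Because the exact nesting path to the tabs can shift, one option is to search for `tabRenderer` keys recursively rather than hard-coding the path. The sketch below does that. The helper names are illustrative. Matching on the title "Videos" assumes an English UI, since the title text follows the page language.

```javascript
// Hypothetical helper: depth-first search that yields every value stored under
// `key` (e.g. "tabRenderer") anywhere inside the parsed ytInitialData.
function* findRenderers(node, key) {
  if (Array.isArray(node)) {
    for (const item of node) yield* findRenderers(item, key);
  } else if (node && typeof node === "object") {
    for (const [k, v] of Object.entries(node)) {
      if (k === key) yield v;
      yield* findRenderers(v, key);
    }
  }
}

// Hypothetical helper: return the tabRenderer titled "Videos", or undefined.
function findVideosTab(initialData) {
  for (const tab of findRenderers(initialData, "tabRenderer")) {
    if (tab && tab.title === "Videos") return tab;
  }
  return undefined;
}
```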

Once you locate this tab renderer, the actual videos array is nested at content.richGridRenderer.contents. This array contains all the video data for the current page. Each entry is a "richItemRenderer" whose content holds a "videoRenderer" object with metadata like videoId, title, view count, and publication date.
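The sketch below turns that array into plain `{ videoId, title }` objects. It assumes each video entry is wrapped as `richItemRenderer.content.videoRenderer`. It also assumes titles come either as text runs or as a `simpleText` string. Any other item is skipped, including the trailing continuation item. The function name is illustrative.

```javascript
// Hypothetical helper: extract basic video info from a "Videos" tabRenderer.
// Assumes entries look like { richItemRenderer: { content: { videoRenderer: {...} } } }.
function videosFromGrid(tabRenderer) {
  const contents = tabRenderer?.content?.richGridRenderer?.contents ?? [];
  const videos = [];
  for (const item of contents) {
    const vr = item?.richItemRenderer?.content?.videoRenderer;
    if (!vr?.videoId) continue; // skips continuationItemRenderer and other non-video items
    videos.push({
      videoId: vr.videoId,
      // Titles may be split into text runs or given as a single simpleText string
      title: vr.title?.runs?.map((r) => r.text).join("") ?? vr.title?.simpleText ?? "",
    });
  }
  return videos;
}
```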

Step 4: Handling Pagination

The initial page only shows the first batch of videos. To get subsequent pages, YouTube uses continuation tokens. Clear the Network tab's request log, filter by "Fetch/XHR", and scroll down on the videos page to trigger loading more content.

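You'll see a request to an endpoint called "browse?prettyPrint=false" (full path /youtubei/v1/browse). This is YouTube's pagination API. Click on this request and examine the response. The newly loaded videos use the same renderer objects as before, but they sit under onResponseReceivedActions[].appendContinuationItemsAction.continuationItems rather than inside a tab renderer.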
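A minimal sketch for pulling the new items out of a browse response follows. It assumes they sit under `onResponseReceivedActions[].appendContinuationItemsAction.continuationItems` in the same item shapes as the first page. Check that against the response you captured. The function name is illustrative.

```javascript
// Hypothetical helper: collect continuation items from a browse response.
// The path below is an assumption; confirm it in the Network tab's response view.
function continuationItemsFromBrowse(response) {
  const actions = response?.onResponseReceivedActions ?? [];
  const items = [];
  for (const action of actions) {
    const batch = action?.appendContinuationItemsAction?.continuationItems;
    if (Array.isArray(batch)) items.push(...batch);
  }
  return items;
}
```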

Step 5: Understanding Continuation Tokens

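Pagination is driven by continuation tokens. In the original videos array, the last item isn't a video at all; it's a "continuationItemRenderer" containing a token (under continuationEndpoint.continuationCommand.token). This token is what you pass to the browse endpoint to get the next page of videos. Each browse response ends with a fresh continuationItemRenderer of its own until the final page.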
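Here's a sketch of reading that token. It assumes the path `continuationItemRenderer.continuationEndpoint.continuationCommand.token` on the last item. The same function works on the first page's contents array and on a browse response's item list. Returning `null` signals the last page. The name is illustrative.

```javascript
// Hypothetical helper: read the continuation token from the last item, or null if absent.
function getContinuationToken(items) {
  const last = Array.isArray(items) ? items[items.length - 1] : undefined;
  return (
    last?.continuationItemRenderer?.continuationEndpoint?.continuationCommand?.token ?? null
  );
}
```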

Right-click on the browse request and select Copy > "Copy as fetch (Node.js)" to see the exact headers and payload structure YouTube expects. The continuation token goes in the JSON POST body as the continuation field, alongside a context object that identifies the client (including clientName and clientVersion).
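A hedged sketch of that POST request for Node.js 18+ follows. The body shape `{ context: { client }, continuation }` is an assumption based on what the captured request typically shows. The client fields (such as `clientName` and `clientVersion`) and any extra headers are passed in, not hard-coded. Copy them from your own "Copy as fetch" output, and keep any extra query parameters it carries. The function names are illustrative.

```javascript
// Hypothetical helpers: request the next page from the browse endpoint.
// Requires Node.js 18+ for the global fetch().
const BROWSE_URL = "https://www.youtube.com/youtubei/v1/browse?prettyPrint=false";

// client: e.g. { clientName: "WEB", clientVersion: "<copied from DevTools>" }
function buildBrowseBody(token, client) {
  return { context: { client: { ...client } }, continuation: token };
}

async function fetchContinuation(token, client, extraHeaders = {}) {
  const res = await fetch(BROWSE_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json", ...extraHeaders },
    body: JSON.stringify(buildBrowseBody(token, client)),
  });
  if (!res.ok) throw new Error(`browse request failed: HTTP ${res.status}`);
  return res.json(); // parsed browse response containing the next batch of items
}
```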

Step 6: Building the Complete Solution

Here's the process flow for scraping all videos from a channel:

- Initial Request: Load the channel's videos page and extract ytInitialData

- Parse Videos: Extract video data from content.richGridRenderer.contents

- Get Token: Find the continuation token from the last item in the contents array

- Paginate: Use the token to request the next page via the browse endpoint

- Repeat: Continue until no more continuation tokens are available
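Putting those steps together, here's a minimal sketch of the loop. It stays self-contained by taking two loader functions instead of making HTTP calls itself. Both names are hypothetical:

- `loadFirstPage()` resolves to the first page's items.
- `loadNextPage(token)` resolves to the items of the next page.

The item shapes (`richItemRenderer.content.videoRenderer`, and the `continuationItemRenderer` token path) are the same assumptions used throughout this article.

```javascript
// Hypothetical pagination loop with a polite delay between requests.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Token from the last item, or null when there is no further page.
function tokenOf(items) {
  const last = items[items.length - 1];
  return last?.continuationItemRenderer?.continuationEndpoint?.continuationCommand?.token ?? null;
}

async function scrapeAllVideos(loadFirstPage, loadNextPage, { delayMs = 1000, maxPages = 500 } = {}) {
  const videos = [];
  const seen = new Set(); // guards against duplicates across pages
  let items = await loadFirstPage();
  for (let page = 1; ; page++) {
    for (const item of items) {
      const vr = item?.richItemRenderer?.content?.videoRenderer;
      if (vr?.videoId && !seen.has(vr.videoId)) {
        seen.add(vr.videoId);
        videos.push(vr);
      }
    }
    const token = tokenOf(items);
    if (!token || page >= maxPages) break; // no token means the last page was reached
    await sleep(delayMs); // rate limiting between requests
    items = await loadNextPage(token);
  }
  return videos; // raw videoRenderer objects, in page order
}
```

Injecting the loaders keeps the pagination logic separate from HTTP details, so the loop can be exercised with canned pages before it ever touches YouTube.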

Technical Implementation Tips

When implementing this approach, keep several factors in mind:

- Headers Matter: YouTube checks for specific headers including User-Agent, cookies, and referrer information. Copy the exact headers from your browser's network tab.

- Rate Limiting: Don't hammer YouTube's servers. Implement delays between requests to avoid triggering anti-bot measures.

- Error Handling: YouTube's responses can vary, and continuation tokens sometimes expire. Build robust error handling for edge cases.

- Data Validation: Always verify that the expected data structure exists before trying to parse it, as YouTube occasionally changes their internal formats.
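The error-handling and validation tips can be sketched with two small helpers. `withRetry` retries a failing async call with exponential backoff plus random jitter. `dig` walks a path of keys and returns `undefined` instead of throwing when a level is missing. Both names and the default values are illustrative, not tuned recommendations.

```javascript
// Hypothetical helpers for retries and defensive property access.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retry fn() up to `retries` extra times, waiting base * 2^attempt ms plus jitter.
async function withRetry(fn, { retries = 3, baseDelayMs = 1000 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      if (attempt >= retries) throw err; // out of retries: surface the last error
      await sleep(baseDelayMs * 2 ** attempt + Math.random() * baseDelayMs);
    }
  }
}

// Follow a list of keys/indices; return undefined if any step is missing.
function dig(obj, path) {
  let node = obj;
  for (const key of path) {
    if (node == null) return undefined;
    node = node[key];
  }
  return node;
}
```

For example, `dig(jsonData, ["contents", "twoColumnBrowseResultsRenderer", "tabs"])` yields `undefined` rather than a `TypeError` if YouTube changes that path. The path there is itself an assumption.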

Parsing Video Metadata

Each video renderer contains rich metadata:

- videoId: Unique identifier for building video URLs

- title: Video title, usually stored as a list of text runs

- thumbnails: Multiple resolution options for video previews

- viewCountText: Human-readable view counts

- publishedTimeText: Relative publication dates

- lengthText: Video duration information
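Here's a sketch that normalizes one videoRenderer into plain values. It assumes these exact paths: `title.runs`, `thumbnail.thumbnails`, and `simpleText` on `viewCountText`, `publishedTimeText`, and `lengthText`. The text fields are display strings, so the number parsing only holds for an English UI. Abbreviated counts like "1.2K views" come back as `null`. All function names are illustrative.

```javascript
// Hypothetical helpers for normalizing videoRenderer metadata.
function textOf(field) {
  if (!field) return "";
  if (typeof field.simpleText === "string") return field.simpleText;
  return (field.runs ?? []).map((r) => r.text).join("");
}

// "1:02:03" -> 3723, "12:34" -> 754; null if the format is unexpected (e.g. "LIVE").
function durationSeconds(text) {
  if (!/^\d+(:\d{1,2})+$/.test(text)) return null;
  return text.split(":").reduce((total, part) => total * 60 + Number(part), 0);
}

// "1,234,567 views" -> 1234567; "No views" or "1.2K views" -> null.
function viewCount(text) {
  const m = /^([\d,]+) views?$/.exec(text.trim());
  return m ? Number(m[1].replace(/,/g, "")) : null;
}

function parseVideoRenderer(vr) {
  const thumbs = vr.thumbnail?.thumbnails ?? [];
  // Pick the widest thumbnail available
  const largest = thumbs.reduce((best, t) => ((t.width ?? 0) > (best?.width ?? -1) ? t : best), null);
  return {
    videoId: vr.videoId,
    url: `https://www.youtube.com/watch?v=${vr.videoId}`,
    title: textOf(vr.title),
    thumbnailUrl: largest?.url ?? null,
    views: viewCount(textOf(vr.viewCountText)),
    published: textOf(vr.publishedTimeText), // relative, e.g. "3 weeks ago"
    durationSeconds: durationSeconds(textOf(vr.lengthText)),
  };
}
```

Because publishedTimeText is relative, posting-pattern analysis from this data alone is approximate.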

This data enables comprehensive analysis of channel content, posting patterns, and performance metrics.

Limitations and Considerations

This reverse-engineering approach has important limitations:

- Unofficial Method: YouTube could change their internal API structure at any time, breaking your scraper

- Terms of Service: Ensure your usage complies with YouTube's terms and doesn't violate their policies

- Scale Constraints: Large-scale scraping may trigger anti-bot measures or IP blocking

- Maintenance Overhead: Internal APIs change more frequently than public ones

Practical Applications

This scraping technique enables various applications:

- Competitive Analysis: Monitor competitors' content strategies, posting frequencies, and performance trends

- Market Research: Analyze content patterns across industry channels to identify successful formats

- Content Planning: Study high-performing videos in your niche to inform your own content strategy

- Academic Research: Gather data for studies on digital media, content creation, or platform dynamics

Conclusion

Scraping YouTube channel data requires understanding both the technical implementation and the broader context of web scraping ethics and sustainability. While the reverse-engineering approach provides deep insight into how YouTube structures their data, production applications often benefit from purpose-built APIs that handle the complexity and maintenance overhead.