Building a reliable, scalable scraping infrastructure is more art than science. After running a production scraping service that handles thousands of requests daily, here's everything I've learned about architecting a system that actually works in the real world, not just in development.

The Foundation: Keep It Simple, Keep It Stable

The core principle behind any successful scraping operation is simplicity. Complex architectures might look impressive in system design interviews, but they become nightmares when you're debugging scraping issues at 2 AM.

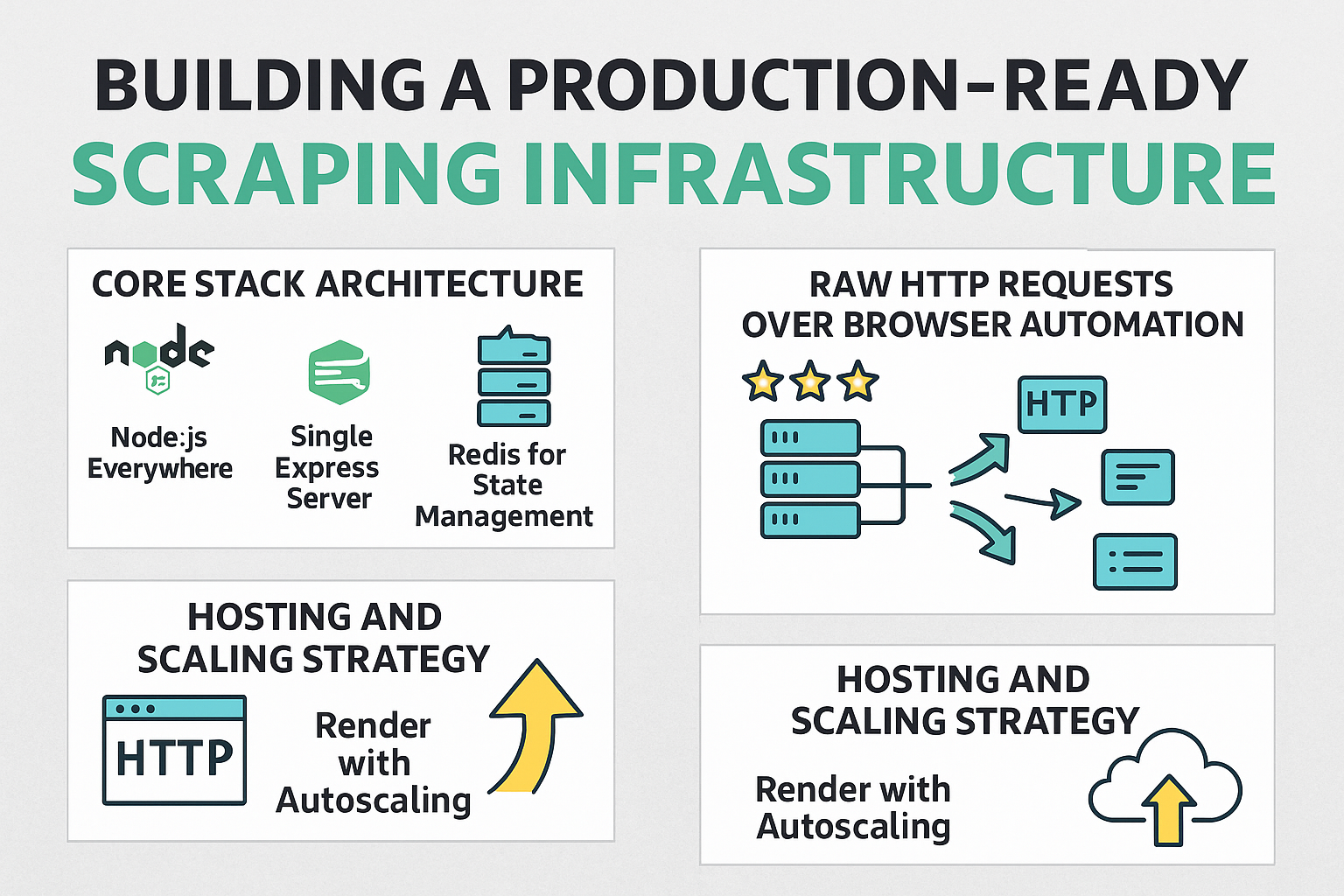

Core Stack Architecture

- Node.js Everywhere: The entire system runs on Node.js, providing consistency across all components and eliminating context switching between different runtime environments.

- Single Express Server: Rather than microservices or distributed architectures, everything runs through one Express server that manages all scraping endpoints. This approach reduces complexity while maintaining enough modularity to scale individual components.

- Redis for State Management: Redis handles user credits, rate-limit counters, and caching that takes load off the database. This setup prevents the database from becoming a bottleneck during traffic spikes.

The beauty of this architecture lies in its predictability. When something breaks (and it will), you have fewer moving parts to diagnose and fewer potential failure points to investigate.

The Real Challenge: Proxy Infrastructure

Here's what no tutorial tells you about scraping: the code is the easy part. The hard part is maintaining reliable proxy infrastructure that keeps your scrapers running consistently.

The Three-Tier Proxy Strategy

- Static Residential Proxies: The gold standard for most scraping operations. These proxies appear as genuine residential IP addresses and rarely get blocked by target sites. They're expensive but provide the highest success rates.

- Rotating Residential Proxies: Essential for high-volume operations where you need fresh IP addresses for each request. These automatically rotate through large pools of residential IPs, making detection much harder.

- Datacenter Proxies: The backup plan. While more likely to be detected and blocked, datacenter proxies are fast and cheap. They're perfect for initial testing and as fallbacks when residential proxies fail.
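One way to express the tier ordering above is a small selector that walks the tiers in priority order and skips any that are unhealthy or have already failed for the current request. This is a minimal sketch, not the service's actual code: the tier names, the `healthy` flag, the ordering, and the proxy URLs are all placeholders invented for illustration.

```javascript
// Hypothetical tier list, ordered from most to least preferred.
// The URLs are placeholders, not real provider endpoints.
const PROXY_TIERS = [
  { name: "static-residential", url: "http://static-res.proxy.example:8000", healthy: true },
  { name: "rotating-residential", url: "http://rotating-res.proxy.example:8000", healthy: true },
  { name: "datacenter", url: "http://dc.proxy.example:8000", healthy: true },
];

// Return the tiers still worth trying, in order, skipping unhealthy ones
// and any tier that already failed for this request.
function proxyCandidates(tiers, alreadyFailed = new Set()) {
  return tiers.filter((t) => t.healthy && !alreadyFailed.has(t.name));
}

// Pick the next proxy to use, or null when every tier is exhausted.
function nextProxy(tiers, alreadyFailed = new Set()) {
  const [first] = proxyCandidates(tiers, alreadyFailed);
  return first ?? null;
}
```

A request loop would call `nextProxy` again after each failure, adding the failed tier's name to `alreadyFailed`, and give up once it returns `null`.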

Proxy Provider Evolution

The proxy landscape changes constantly, and provider reliability can shift overnight. Initially, Evomi provided adequate service, but as scaling requirements increased, reliability became more critical than cost savings.

The migration to Decodo represented a classic scaling trade-off: higher costs in exchange for significantly better reliability. When you're running a production service, a few extra dollars per thousand requests is negligible compared to the cost of downtime and failed scraping attempts.

Technical Implementation: Speed and Efficiency

Raw HTTP Requests Over Browser Automation

The temptation to use browser automation tools like Puppeteer for everything is strong, especially when you're starting out. Browsers feel familiar and handle JavaScript rendering automatically.

However, raw HTTP requests can be one to two orders of magnitude faster and consume far fewer resources, because there is no browser process to launch and no page assets to download or render. Most scraping targets don't require full browser rendering; you just need to understand their API endpoints and request patterns.

When to Use HTTP Requests:

- API endpoints that return JSON data

- Simple HTML pages without complex JavaScript

- High-volume operations where speed matters

- Cost-sensitive applications

When Browser Automation Is Necessary:

- JavaScript-heavy applications that render content dynamically

- Sites with complex anti-bot measures

- Endpoints that require user interaction simulation

For the current setup, Puppeteer is reserved exclusively for specific TikTok Shop endpoints that require browser rendering. Everything else uses direct HTTP requests.
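The split between the two approaches can be sketched as a routing check plus a plain HTTP helper. This is an illustration under assumptions: the endpoint name in `BROWSER_ENDPOINTS` and the function names are hypothetical, and the helper relies on the `fetch` API built into Node 18 and later.

```javascript
// Hypothetical endpoint names; only the ones listed here go through a browser.
const BROWSER_ENDPOINTS = new Set(["tiktok-shop/product"]);

// Decide which strategy an endpoint should use.
function pickStrategy(endpoint) {
  return BROWSER_ENDPOINTS.has(endpoint) ? "browser" : "http";
}

// Plain HTTP fetch of a JSON endpoint.
// `fetchImpl` is injectable so the function can be exercised without a network.
async function fetchJson(url, { headers = {}, timeoutMs = 10_000, fetchImpl = fetch } = {}) {
  const res = await fetchImpl(url, {
    headers: { accept: "application/json", ...headers },
    signal: AbortSignal.timeout(timeoutMs), // abort requests that take too long
  });
  if (!res.ok) throw new Error(`HTTP ${res.status} for ${url}`);
  return res.json();
}
```

Keeping the browser list explicit makes the expensive path opt-in: a new endpoint uses raw HTTP unless someone deliberately adds it to the set.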

Hosting and Scaling Strategy

Current Setup: Render with Autoscaling

Render provides a middle ground between traditional VPS hosting and full cloud infrastructure. The autoscaling capabilities handle most traffic variations automatically, spinning up new instances when demand increases.

Render Advantages:

- Automatic deployments from Git

- Built-in scaling without infrastructure management

- Reasonable pricing for moderate traffic

- Simplified monitoring and logging

Render Limitations:

- Cold start delays can cause temporary 502 errors during traffic spikes

- Less control over scaling parameters compared to AWS

- Higher per-request costs at scale

Next Evolution: AWS Lambda

The migration to AWS Lambda represents the next logical step toward near-instant scaling. Lambda's pay-per-request model aligns well with scraping workloads, where traffic can be highly variable.

Lambda Benefits for Scraping:

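- Per-request scaling: each concurrent request gets its own execution environment, so a spike doesn't queue behind a single booting instance (cold starts still occur, and provisioned concurrency can reduce them)

- Close cost alignment (billing is per request and per unit of execution time)

- High scaling ceiling (concurrency is capped by a per-region account quota, which can be raised on request)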

- Integrated monitoring and error tracking

The main challenge will be adapting the current architecture to Lambda's stateless model, particularly around proxy session management and Redis connections.
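A common way to handle connections in Lambda is to create the client at module scope, so warm invocations on the same instance reuse it instead of reconnecting on every request. The sketch below shows that pattern only; `createClient` is a hypothetical factory standing in for a real Redis client constructor, and the `credits:` key format is made up for the example.

```javascript
// Module scope survives between invocations on a warm Lambda instance,
// so the client is created once per instance rather than once per request.
let cachedClient = null;

// `createClient` is a hypothetical factory for a Redis client.
function getClient(createClient) {
  if (cachedClient === null) {
    cachedClient = createClient();
  }
  return cachedClient;
}

// Shape of a Lambda handler that uses the cached client.
// `client.get` is assumed to return a promise.
function makeHandler(createClient) {
  return async function handler(event) {
    const client = getClient(createClient);
    const credits = await client.get(`credits:${event.userId}`);
    return { statusCode: 200, body: JSON.stringify({ credits: Number(credits) }) };
  };
}
```

Anything that must persist across instances, such as sticky proxy sessions, still has to live in an external store, because each instance has its own module scope.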

Why This Architecture Works

Node.js Event Loop Advantage

Scraping is inherently I/O intensive – you're constantly waiting for network requests to complete. Node.js's event loop model excels at this workload, efficiently managing thousands of concurrent requests without the overhead of thread management.

A traditional thread-per-request design would need significantly more memory to handle the same throughput, since every waiting request holds a thread and its stack.
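To make the event loop point concrete, here is a small helper that keeps a fixed number of requests in flight on a single thread. It is a generic sketch rather than code from the service; `mapWithConcurrency` and its parameters are names chosen for this example.

```javascript
// Run `worker` over `items` with at most `limit` tasks in flight.
// Results are returned in the same order as `items`.
async function mapWithConcurrency(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0;

  // Each runner claims the next unprocessed index until none remain.
  async function runner() {
    while (next < items.length) {
      const i = next++;
      results[i] = await worker(items[i], i);
    }
  }

  const runners = Array.from({ length: Math.min(limit, items.length) }, () => runner());
  await Promise.all(runners);
  return results;
}
```

While one request waits on the network, the event loop runs the others, so the limit exists to protect proxies and target sites rather than to work around thread costs. Note that the first worker error rejects the returned promise; wrap `worker` in a try/catch if partial results are preferred.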

Redis as the Performance Multiplier

Database queries can quickly become bottlenecks in high-traffic scraping applications. Redis serves as both a cache and a fast data store for frequently accessed information:

- Credit Management: User credit balances are stored in Redis, eliminating database queries for every scraping request.

- Rate Limiting: Request throttling data stays in Redis for instant access during request validation.

- Caching: Frequently requested scraping results can be cached temporarily, reducing load on target sites and improving response times.
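The credit check is the part most sensitive to races, so it is worth sketching. The example below assumes an ioredis-style client with promise-based `decrby` and `incrby` methods; the key format, the function names, and the choice to refund credits when a scrape fails are assumptions for illustration, not a description of the production logic.

```javascript
// `redis` is assumed to be an ioredis-style client exposing promise-based
// `decrby` and `incrby`. The key format is hypothetical.
async function reserveCredits(redis, userId, cost) {
  const key = `credits:${userId}`;
  // DECRBY is atomic, so two concurrent requests cannot spend the same credit.
  const remaining = await redis.decrby(key, cost);
  if (remaining < 0) {
    await redis.incrby(key, cost); // undo the overdraft
    return { ok: false, remaining: remaining + cost };
  }
  return { ok: true, remaining };
}

// Charge for a scrape and refund the credits if the scrape throws.
async function withCredits(redis, userId, cost, scrape) {
  const reservation = await reserveCredits(redis, userId, cost);
  if (!reservation.ok) throw new Error("insufficient credits");
  try {
    return await scrape();
  } catch (err) {
    await redis.incrby(`credits:${userId}`, cost);
    throw err;
  }
}
```

Decrementing first and undoing on overdraft avoids a separate read-then-write step, which is where double spending usually creeps in. The balances would still need to be synced back to the persistent database on some schedule.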

Proxy Reliability as Infrastructure

Treating proxy management as core infrastructure rather than an afterthought prevents the most common scraping failures. When your proxies work consistently, everything else becomes much more manageable.

Operational Lessons Learned

Solo Maintainability

Complex architectures require teams to maintain effectively. As a solo operation, keeping the system simple enough for one person to understand and debug is crucial.

This means:

- Clear, documented code patterns

- Minimal external dependencies

- Straightforward deployment processes

- Comprehensive error logging and monitoring

Scaling Decisions

Each scaling decision involves trade-offs between cost, complexity, and reliability. The key is making these decisions based on actual constraints rather than theoretical performance concerns.

Moving from Evomi to Decodo wasn't about finding cheaper proxies – it was about eliminating a reliability bottleneck that was affecting user experience.

Monitoring and Alerting

Production scraping systems fail in interesting ways. Effective monitoring goes beyond basic uptime checks:

- Success Rate Tracking: Monitor scraping success rates by endpoint and proxy provider

- Response Time Analysis: Track performance degradation before it becomes user-visible

- Error Pattern Recognition: Identify when target sites change their anti-scraping measures

- Resource Utilization: Monitor proxy usage and credit consumption patterns
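Success-rate tracking by endpoint and provider needs very little machinery to start with. The following in-memory sketch shows the idea; the class name, the key format, and the default thresholds are assumptions, and a real deployment would more likely push these counters to a metrics system.

```javascript
// In-memory counters keyed by "endpoint|provider".
class SuccessTracker {
  constructor() {
    this.counts = new Map();
  }

  // Record the outcome of one scraping attempt.
  record(endpoint, provider, succeeded) {
    const key = `${endpoint}|${provider}`;
    const entry = this.counts.get(key) ?? { ok: 0, total: 0 };
    entry.total += 1;
    if (succeeded) entry.ok += 1;
    this.counts.set(key, entry);
  }

  // Success rate in [0, 1], or null when nothing has been recorded.
  rate(endpoint, provider) {
    const entry = this.counts.get(`${endpoint}|${provider}`);
    return entry ? entry.ok / entry.total : null;
  }

  // Keys whose success rate is below `threshold` after at least `minSamples` attempts.
  failing(threshold = 0.9, minSamples = 20) {
    return [...this.counts]
      .filter(([, e]) => e.total >= minSamples && e.ok / e.total < threshold)
      .map(([key]) => key);
  }
}
```

The `minSamples` guard keeps a single failed request on a quiet endpoint from triggering an alert.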

Future Architecture Considerations

Microservices vs. Monolith

While the current monolithic approach works well, certain components might benefit from separation as scale increases:

- Proxy Management Service: Dedicated service for proxy health monitoring and rotation

- Credit Management Service: Separate billing and usage tracking from core scraping logic

- Queue Management: Background job processing for long-running scraping tasks

Database Strategy

The current setup relies heavily on Redis with a traditional database for persistent storage. As data volume grows, consider:

- Time-series Databases: For scraping analytics and performance metrics

- Document Stores: For flexible storage of scraped content with varying structures

- Data Warehousing: For historical analysis and business intelligence

Implementation Best Practices

Error Handling and Retries

Scraping operations fail regularly, and your architecture must account for this reality:

- Exponential Backoff: Retry delays that grow after each failure (ideally with random jitter) prevent overwhelming target sites

- Circuit Breakers: Stop attempting requests when failure rates exceed thresholds

- Fallback Strategies: Multiple proxy providers and endpoints for critical operations
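The first two patterns can be sketched in a few lines. This is an illustrative version under stated assumptions: the defaults are arbitrary, the jitter scheme is the common "full jitter" variant, and the breaker trips on consecutive failures, which is a simplification of the failure-rate threshold described above.

```javascript
// Delay before retry number `attempt` (0-based): base * 2^attempt, capped at maxMs,
// with full jitter (a random value between 0 and the capped delay).
function backoffDelay(attempt, { baseMs = 500, maxMs = 30_000, random = Math.random } = {}) {
  const capped = Math.min(maxMs, baseMs * 2 ** attempt);
  return Math.floor(random() * capped);
}

// Minimal circuit breaker: opens after `maxFailures` consecutive failures,
// then allows a trial request once `cooldownMs` has elapsed.
class CircuitBreaker {
  constructor({ maxFailures = 5, cooldownMs = 60_000, now = Date.now } = {}) {
    this.maxFailures = maxFailures;
    this.cooldownMs = cooldownMs;
    this.now = now; // injectable clock for testing
    this.failures = 0;
    this.openedAt = null;
  }

  canRequest() {
    if (this.openedAt === null) return true;
    return this.now() - this.openedAt >= this.cooldownMs;
  }

  recordSuccess() {
    this.failures = 0;
    this.openedAt = null;
  }

  recordFailure() {
    this.failures += 1;
    if (this.failures >= this.maxFailures) this.openedAt = this.now();
  }
}
```

Keeping one breaker per target site or per proxy provider lets a failing dependency be skipped without halting unrelated endpoints.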

Security Considerations

Production scraping services face unique security challenges:

- API Rate Limiting: Prevent abuse while maintaining legitimate access

- Proxy IP Protection: Avoid exposing proxy infrastructure to potential attackers

- Data Privacy: Handle scraped content responsibly and in compliance with regulations
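For the rate limiting point, a token bucket is one common choice because it allows short bursts while bounding the sustained rate. The sketch below keeps its state in process memory to stay self-contained; that is an assumption for the example, since a limiter shared across instances would keep the same counters in Redis. The class name and defaults are hypothetical.

```javascript
// Token bucket: each key may burst up to `capacity` requests and refills
// at `refillPerSec` tokens per second.
class TokenBucketLimiter {
  constructor({ capacity = 10, refillPerSec = 1, now = Date.now } = {}) {
    this.capacity = capacity;
    this.refillPerSec = refillPerSec;
    this.now = now; // injectable clock for testing
    this.buckets = new Map(); // key -> { tokens, updatedAt }
  }

  // Returns true and consumes a token if the request is allowed.
  allow(key) {
    const t = this.now();
    const b = this.buckets.get(key) ?? { tokens: this.capacity, updatedAt: t };
    const elapsedSec = (t - b.updatedAt) / 1000;
    b.tokens = Math.min(this.capacity, b.tokens + elapsedSec * this.refillPerSec);
    b.updatedAt = t;
    const allowed = b.tokens >= 1;
    if (allowed) b.tokens -= 1;
    this.buckets.set(key, b);
    return allowed;
  }
}
```

Keying the bucket by API key rather than by IP address keeps the limit tied to the paying account.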

Cost Optimization

Scraping costs can escalate quickly without careful management:

- Proxy Cost Monitoring: Track usage patterns and optimize proxy allocation

- Caching Strategies: Reduce redundant scraping through intelligent caching

- Request Optimization: Minimize unnecessary requests through better endpoint design
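As a concrete take on the caching point, the sketch below pairs a small TTL cache with a key function that normalizes URLs, so equivalent requests share one cached result. It is a minimal in-memory illustration; the names `TtlCache` and `cacheKey` and the 60-second default are assumptions, and the same idea carries over to Redis keys with an expiry.

```javascript
// Small TTL cache. `now` is injectable so expiry can be tested deterministically.
class TtlCache {
  constructor({ ttlMs = 60_000, now = Date.now } = {}) {
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map(); // key -> { value, expiresAt }
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // drop stale entries lazily
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

// Build a cache key that ignores query-parameter order and fragments,
// so ?a=1&b=2 and ?b=2&a=1 map to the same entry.
function cacheKey(rawUrl) {
  const url = new URL(rawUrl);
  url.searchParams.sort();
  url.hash = "";
  return url.toString();
}
```

Every cache hit is a proxy request that never has to be paid for, so even a short TTL can cut costs noticeably on popular targets.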